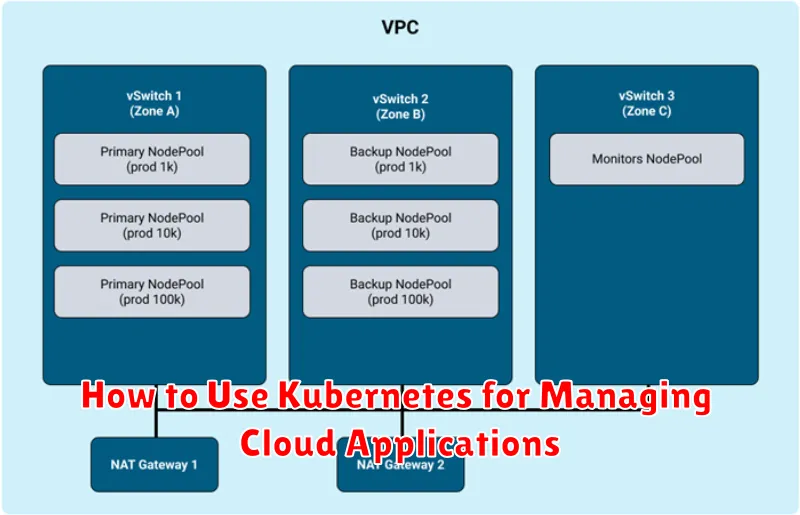

Kubernetes has become the most widely used platform for running containerized applications in the cloud. It automates the deployment, scaling, and management of containers across diverse cloud environments. This guide explains the fundamental concepts and practical techniques for deploying, monitoring, and scaling cloud applications with Kubernetes, whatever your experience level.

From simple web applications to complex microservice architectures, Kubernetes provides the tools needed to manage cloud applications. This guide covers the core building blocks of Kubernetes, including pods, deployments, services, and namespaces, and shows the role each plays. It also explores the main advantages of the platform, such as automated rollouts and rollbacks, self-healing, and efficient resource use.

What Is Kubernetes and Why Use It?

Kubernetes, often abbreviated as K8s, is an open-source platform designed for automating the deployment, scaling, and management of containerized applications.

Instead of managing individual machines, you group hosts into a cluster and deploy applications to the cluster as a whole. The control plane runs on Linux, while worker nodes can run Linux or Windows. Kubernetes abstracts away the underlying infrastructure and offers a single API for application management.

Key benefits of using Kubernetes include simplified deployments, automated rollouts and rollbacks, self-healing capabilities, and efficient resource utilization. Kubernetes automates the process of distributing application containers across the cluster, which supports high availability and resilience. If a container fails, Kubernetes restarts it. If a node fails, the Pods that were running on it are rescheduled onto healthy nodes, provided they are managed by a controller, which minimizes downtime.

Kubernetes also makes scaling straightforward. You can scale an application up or down by changing the number of Pod replicas that run it, which lets you adapt to changing demand and control resource consumption. Kubernetes also offers service discovery and load balancing, simplifying complex networking tasks.

Core Components of Kubernetes

Kubernetes is built upon several key components that work together to orchestrate and manage containerized applications. Understanding these core components is crucial for effectively leveraging Kubernetes.

The control plane is the brain of the Kubernetes cluster. It manages the overall state of the cluster and makes decisions about scheduling and resource allocation. Key components within the control plane include the API server, scheduler, controller manager, and etcd (a distributed key-value store for cluster data).
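The idea behind the controller manager can be illustrated with a toy reconciliation loop: compare the desired state with the observed state and act to close the gap. The sketch below is not Kubernetes code. `ToyCluster` and `reconcile` are hypothetical names invented for this illustration, and the "pods" are just strings in a list.

```python
# Toy model of a control loop; not real Kubernetes code.
from dataclasses import dataclass, field


@dataclass
class ToyCluster:
    """Hypothetical in-memory stand-in for cluster state."""
    desired_replicas: int
    running: list = field(default_factory=list)
    next_id: int = 0

    def start_pod(self) -> None:
        # Give every new pod a fresh name, as replacements do in practice.
        self.running.append(f"pod-{self.next_id}")
        self.next_id += 1

    def stop_pod(self) -> None:
        self.running.pop()


def reconcile(cluster: ToyCluster) -> None:
    """Drive the observed state toward the desired state."""
    while len(cluster.running) < cluster.desired_replicas:
        cluster.start_pod()
    while len(cluster.running) > cluster.desired_replicas:
        cluster.stop_pod()


cluster = ToyCluster(desired_replicas=3)
reconcile(cluster)                # starts three pods
cluster.running.remove("pod-1")   # simulate a crashed pod
reconcile(cluster)                # starts one replacement
print(cluster.running)
```

A real controller watches the API server for changes rather than looping over a local list, but the compare-and-correct pattern is the same.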

Nodes are the worker machines where containers are deployed and run. Each node runs a kubelet, which communicates with the control plane, and a container runtime (such as containerd or CRI-O) responsible for managing the containers on that node. Docker Engine can still be used as a runtime, but since Kubernetes 1.24 it requires a separate adapter (cri-dockerd).

Pods are the smallest deployable units in Kubernetes and represent one or more containers that are deployed together on a node. The containers in a Pod share a network namespace and can share storage volumes.

Deploying Applications Using Pods and Services

In Kubernetes, applications run in Pods. A Pod is the smallest deployable unit in Kubernetes and represents a single instance of a running application. It can contain one or more containers that share resources such as network and storage. Pods are ephemeral: a failed Pod is not repaired in place. When Pods are managed by a controller such as a Deployment, the controller creates a replacement, which is what provides high availability and resilience. A Pod created on its own, without a controller, is not recreated if its node fails.
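As a minimal sketch of how Pods are usually created in practice, the snippet below builds a Deployment manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The helper name `deployment`, the application name `web`, the image tag, and the container port are illustrative assumptions, not values from this guide.

```python
import json


def deployment(name: str, image: str, replicas: int = 3) -> dict:
    """Build a minimal apps/v1 Deployment manifest (illustrative helper)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels in the Pod template below.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 80}],
                        }
                    ]
                },
            },
        },
    }


# Hypothetical application name and image, chosen only for the example.
web = deployment("web", "nginx:1.27")
print(json.dumps(web, indent=2))
```

Saving the output to a file and applying it with `kubectl apply -f` would ask the cluster to keep three replicas of the Pod template running.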

While Pods provide the runtime environment, they lack a stable network identity, because each replacement Pod gets a new IP address. This is where Services come in. A Service is a stable abstraction over a set of Pods, chosen by a label selector, that provides a consistent IP address and DNS name. Even as Pods are created or destroyed due to scaling or failures, the Service remains constant and directs traffic to the matching Pods that are ready to receive it.

There are several types of Services, each offering different ways to expose your application: ClusterIP (internal access only), NodePort (exposes the service on each node’s IP at a static port), LoadBalancer (utilizes a cloud provider’s load balancer), and ExternalName (maps a Service to an external DNS name).
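The following sketch builds a Service manifest for the three selector-based types listed above. It assumes a set of Pods labelled `app: web` listening on port 8080, and the helper name `service` is likewise hypothetical. ExternalName is left out because it is configured with an external DNS name rather than a Pod selector.

```python
import json

# Service types that route to Pods through a label selector.
SELECTOR_BASED_TYPES = {"ClusterIP", "NodePort", "LoadBalancer"}


def service(name: str, app_label: str, port: int, target_port: int,
            service_type: str = "ClusterIP") -> dict:
    """Build a minimal v1 Service manifest (illustrative helper)."""
    if service_type not in SELECTOR_BASED_TYPES:
        raise ValueError(f"unsupported service type: {service_type}")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": service_type,
            # Traffic goes to Pods carrying this label.
            "selector": {"app": app_label},
            "ports": [
                {"protocol": "TCP", "port": port, "targetPort": target_port}
            ],
        },
    }


# Hypothetical names and ports, for illustration only.
internal = service("web", "web", port=80, target_port=8080)
public = service("web-public", "web", port=80, target_port=8080,
                 service_type="LoadBalancer")
print(json.dumps(internal, indent=2))
```

Here `port` is the port the Service exposes and `targetPort` is the port the containers listen on, so the two can differ.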

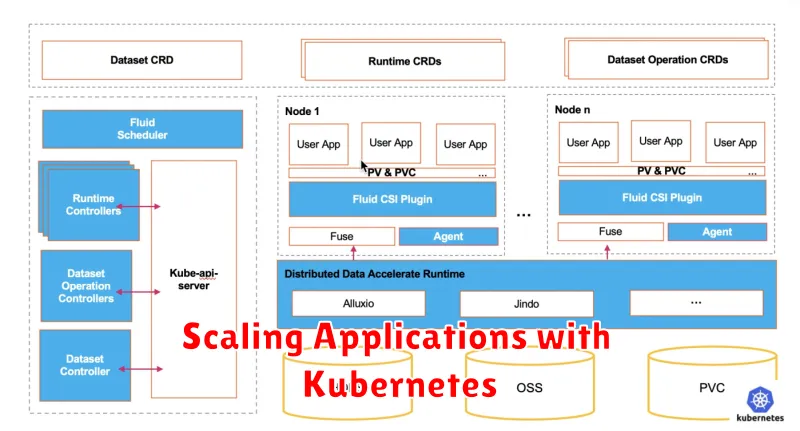

Scaling Applications with Kubernetes

Kubernetes excels at simplifying the scaling process for cloud applications. It allows you to easily adjust the number of running instances, known as pods, to meet varying demand. This dynamic scaling capability is crucial for ensuring application performance and resource optimization.

There are two primary scaling methods: horizontal and vertical. Horizontal scaling involves increasing or decreasing the number of pods running your application. Vertical scaling involves modifying the resources (CPU and memory) allocated to each pod. Kubernetes provides straightforward mechanisms for both approaches.

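The Horizontal Pod Autoscaler (HPA) automatically adjusts the number of pods based on observed CPU utilization, memory usage, or custom metrics. You define target values, and the HPA scales your application to stay close to them. CPU and memory utilization are measured relative to the resource requests set on your containers, so those requests need to be defined for utilization-based scaling to work.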
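The core of the HPA calculation, as described in the Kubernetes documentation, is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The function below is a simplified sketch of that rule. It assumes a tolerance of 10% around the target and ignores details the real controller handles, such as pods that are not ready, missing metrics, and stabilization windows. The function name and the default bounds are illustrative.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified version of the HPA scaling rule."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


# 4 pods averaging 90% CPU against a 60% target: scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))
# 4 pods averaging 30% CPU against a 60% target: scale in.
print(desired_replicas(4, current_metric=30, target_metric=60))
```

Because the result is rounded up, the autoscaler errs on the side of having slightly more capacity than the target strictly requires.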

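The Vertical Pod Autoscaler (VPA) adjusts the resource requests and limits for your pods based on historical resource usage patterns. Unlike the HPA, it is not part of core Kubernetes: it is an add-on from the Kubernetes autoscaler project that you install separately, although some managed services offer it as an option. It can run in a recommendation-only mode or apply its recommendations automatically, which helps keep pods right-sized without manual tuning.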
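As a sketch, a VPA object that only produces recommendations might look like the manifest built below. It assumes the VPA add-on and its `autoscaling.k8s.io/v1` custom resource are installed in the cluster, and it targets a hypothetical Deployment named `web`. Check the field names against the VPA version you actually run.

```python
import json


def vertical_pod_autoscaler(name: str, deployment_name: str,
                            update_mode: str = "Off") -> dict:
    """Build a VerticalPodAutoscaler manifest (assumes the VPA add-on)."""
    return {
        "apiVersion": "autoscaling.k8s.io/v1",
        "kind": "VerticalPodAutoscaler",
        "metadata": {"name": name},
        "spec": {
            # The workload whose Pods should be analysed.
            "targetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment_name,
            },
            # "Off" means: compute recommendations but do not apply them.
            "updatePolicy": {"updateMode": update_mode},
        },
    }


# Hypothetical object and Deployment names.
vpa = vertical_pod_autoscaler("web-vpa", "web")
print(json.dumps(vpa, indent=2))
```

Starting in recommendation-only mode lets you review the suggested requests before allowing the autoscaler to change running workloads.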

Managing Configurations and Secrets

In Kubernetes, managing configurations and secrets effectively is crucial for application stability and security. Configurations are parameters that define how your application behaves, such as database connection strings or feature flags. These should be treated as data, separate from your application’s code.

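Kubernetes offers ConfigMaps for storing non-sensitive configuration data. These are stored as key-value pairs and can be mounted as volumes or exposed as environment variables within your pods. For sensitive data like passwords and API keys, Kubernetes provides Secrets. Secrets are also stored as key-value pairs, with values base64-encoded. By default they are not encrypted in etcd: encryption at rest has to be enabled on the cluster, and access should be restricted with RBAC. Many managed services let you turn on encryption at rest as a cluster option.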

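Secrets should never be hardcoded into your application or container image. Instead, reference them through environment variables or volumes mounted from Secrets. This approach enhances security by centralizing secret management and makes rotation easier, because the value changes in one place and no image rebuild is needed. Note that values mounted as volumes are refreshed in running pods after a short delay, whereas values injected as environment variables are only picked up when the pod restarts.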
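The sketch below builds a ConfigMap, a Secret, and the `env` entries a container could use to read from both. All object names, keys, and values are hypothetical. It also shows that the `data` values in a Secret manifest are base64-encoded, which is an encoding rather than encryption, so anyone who can read the object can decode it.

```python
import base64
import json


def config_map(name: str, data: dict) -> dict:
    """Build a v1 ConfigMap with plain-text values."""
    return {"apiVersion": "v1", "kind": "ConfigMap",
            "metadata": {"name": name}, "data": dict(data)}


def secret(name: str, values: dict) -> dict:
    """Build a v1 Secret; values in `data` must be base64-encoded."""
    encoded = {key: base64.b64encode(value.encode("utf-8")).decode("ascii")
               for key, value in values.items()}
    return {"apiVersion": "v1", "kind": "Secret", "type": "Opaque",
            "metadata": {"name": name}, "data": encoded}


def container_env(config_name: str, secret_name: str) -> list:
    """Environment entries that reference the objects instead of literals."""
    return [
        {"name": "FEATURE_FLAG",
         "valueFrom": {"configMapKeyRef": {"name": config_name,
                                           "key": "feature_flag"}}},
        {"name": "DB_PASSWORD",
         "valueFrom": {"secretKeyRef": {"name": secret_name,
                                        "key": "password"}}},
    ]


# Hypothetical names and values, for illustration only.
app_config = config_map("app-config", {"feature_flag": "on"})
app_secret = secret("app-secret", {"password": "s3cr3t"})
env = container_env("app-config", "app-secret")

# Base64 is trivially reversible, so this recovers the original value.
decoded = base64.b64decode(app_secret["data"]["password"]).decode("utf-8")
print(json.dumps([app_config, app_secret], indent=2))
```

The `env` list would go under a container's `env` field in a Pod template, so the application reads `FEATURE_FLAG` and `DB_PASSWORD` without the values ever appearing in the image or the Deployment manifest.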

Leveraging these mechanisms helps ensure your application remains portable and adaptable to different environments while safeguarding sensitive information.

Monitoring and Logging Clusters

Monitoring and logging are crucial for maintaining the health and performance of your Kubernetes clusters. Effectively monitoring your clusters allows you to identify and address issues proactively, ensuring application availability and optimal resource utilization. Logging provides valuable insights into the behavior of your applications and infrastructure, aiding in debugging and performance analysis.

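Kubernetes components expose metrics, and two widely used add-ons build on them. Neither is part of the core distribution, though some managed services install them for you. kube-state-metrics exposes metrics about the state of Kubernetes objects, such as how many replicas of a deployment are desired versus available. Metrics Server collects CPU and memory usage for pods and nodes from the kubelets and serves it through the Metrics API, which the Horizontal Pod Autoscaler and `kubectl top` rely on.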

For logging, Kubernetes captures what each container writes to standard output and standard error, which you can read with `kubectl logs`, but it does not provide built-in cluster-wide log aggregation. For that you deploy a logging stack, which typically consists of a logging agent, a collector, and a storage backend. A popular choice is the Elasticsearch, Fluentd, and Kibana (EFK) stack. These tools collect logs from your applications and system components, allowing you to search, analyze, and visualize log data.
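On the application side, the usual pattern is to write logs to standard output, where the container runtime captures them and a logging agent can pick them up. The sketch below emits one JSON object per line, a format most collectors can parse. `JsonFormatter` and `build_logger` are hypothetical names, and the set of fields is an assumption rather than a required schema.

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


def build_logger(name: str = "app", stream=None) -> logging.Logger:
    """Create a logger that writes JSON lines to stdout by default."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.handlers.clear()
    handler = logging.StreamHandler(sys.stdout if stream is None else stream)
    handler.setFormatter(JsonFormatter())
    logger.addHandler(handler)
    logger.propagate = False  # avoid duplicate lines via the root logger
    return logger


log = build_logger()
log.info("order %s processed", "A-1001")
```

Writing to standard output rather than to files inside the container keeps the application unaware of where logs end up, which is what lets the logging stack be swapped without code changes.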

By combining comprehensive monitoring and robust logging, you can gain valuable insights into your Kubernetes deployments, enabling proactive management and efficient troubleshooting.

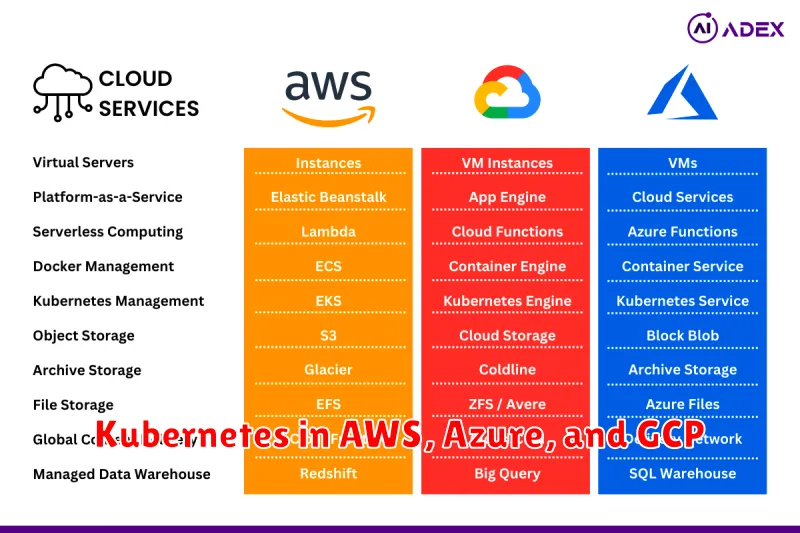

Kubernetes in AWS, Azure, and GCP

Each major cloud provider offers a managed Kubernetes service, simplifying deployment and management. These services abstract away much of the underlying infrastructure complexity, allowing you to focus on your applications.

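Amazon Web Services (AWS) offers Elastic Kubernetes Service (EKS). EKS provides a managed control plane that AWS operates and patches. For worker capacity you can run self-managed nodes, use managed node groups that automate node provisioning and updates, or run pods on AWS Fargate without managing nodes at all, which gives you flexibility in instance types and scaling.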

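Microsoft Azure provides Azure Kubernetes Service (AKS). Azure manages the control plane, while worker nodes run as virtual machines in your subscription, organized into node pools. AKS automates much of the node provisioning, upgrade, and scaling work, which reduces operational overhead and simplifies cluster management.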

Google Cloud offers Google Kubernetes Engine (GKE). GKE integrates closely with other Google Cloud services and provides advanced networking and security features. It offers two modes of operation: Autopilot, in which Google manages the nodes for you, and Standard, in which you configure and manage node pools yourself.

Choosing the right provider depends on your specific needs and existing cloud infrastructure. Each service provides a robust and reliable platform for running Kubernetes clusters.