Keeping online applications available, fast, and scalable is a core requirement for any business that runs in the cloud, and cloud load balancing plays a crucial role in meeting it. This article covers the essentials of cloud load balancing: what it is, how it works, and why it matters for businesses of all sizes. We will explore the core concepts behind load balancers, their various types, and the benefits they bring to modern application architectures. Understanding cloud load balancing is useful for anyone involved in developing, deploying, or managing cloud-based applications.

At its core, cloud load balancing distributes incoming network traffic across multiple servers so that no single server becomes overloaded. Done well, it reduces latency, increases throughput, and provides fault tolerance. The sections below cover the algorithms used to distribute traffic, the load balancing services offered by major cloud providers, and practices for implementing a robust and effective cloud load balancing strategy.

What Is Load Balancing in the Cloud?

Cloud load balancing efficiently distributes incoming network traffic across multiple servers in a cloud environment. This prevents any single server from becoming overloaded, ensuring high availability and responsiveness for applications and websites.

Instead of directing all requests to one server, a load balancer acts as a “traffic cop,” intelligently forwarding requests to different servers based on factors like server health, current load, and geographic location. This optimizes resource utilization and prevents bottlenecks.

Imagine a popular online store during a flash sale. Without load balancing, a sudden surge in traffic could overwhelm a single server, leading to slowdowns or even crashes. With load balancing, the traffic is spread across multiple servers, ensuring that customers can continue shopping seamlessly.

Load balancing is a crucial component of cloud computing, enabling businesses to scale their applications dynamically and provide a consistently positive user experience. It also contributes significantly to fault tolerance: if one server fails, the load balancer automatically redirects traffic to the remaining healthy servers, minimizing downtime.

Types of Load Balancers (HTTP, TCP, UDP)

Load balancers distribute incoming traffic across multiple servers, preventing overload and ensuring application availability. They operate at different layers of the network stack, impacting their functionality and suitability for various applications. The most common types are HTTP, TCP, and UDP load balancers.

HTTP load balancers operate at Layer 7 (Application Layer). They understand HTTP protocol specifics, allowing them to make routing decisions based on factors like URL, headers, and cookies. This enables advanced features like content-based routing and session persistence.
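To make content-based routing concrete, here is a minimal Python sketch of how a Layer 7 balancer might pick a backend pool from the URL path and a request header. The pool names, the `X-Canary` header, and the `choose_pool` function are all hypothetical, chosen only for illustration; real load balancers express these rules in their own configuration formats.

```python
from urllib.parse import urlsplit

# Hypothetical routing table: path prefix -> backend pool name.
ROUTES = [
    ("/api/", "api-pool"),
    ("/static/", "static-pool"),
]
DEFAULT_POOL = "web-pool"


def choose_pool(url: str, headers: dict) -> str:
    """Pick a backend pool from the request URL and headers."""
    # Header-based rule (assumed for this sketch): requests flagged as
    # canary traffic go to a separate pool. The lookup is case-sensitive
    # here, which a real HTTP implementation would not be.
    if headers.get("X-Canary") == "1":
        return "canary-pool"

    # Path-based rule: the first matching prefix wins.
    path = urlsplit(url).path
    for prefix, pool in ROUTES:
        if path.startswith(prefix):
            return pool
    return DEFAULT_POOL
```

A Layer 4 balancer could not apply either rule, because it never parses the HTTP request.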

TCP load balancers function at Layer 4 (Transport Layer). They distribute TCP connections without inspecting the application data those connections carry. Because they skip payload inspection, they add little overhead per connection and are suitable for applications requiring persistent connections, such as databases and messaging systems.

UDP load balancers also work at Layer 4, but handle UDP traffic. UDP is a connectionless protocol, commonly used for real-time applications like streaming and gaming. UDP load balancers focus on speed and efficient distribution of these datagrams.

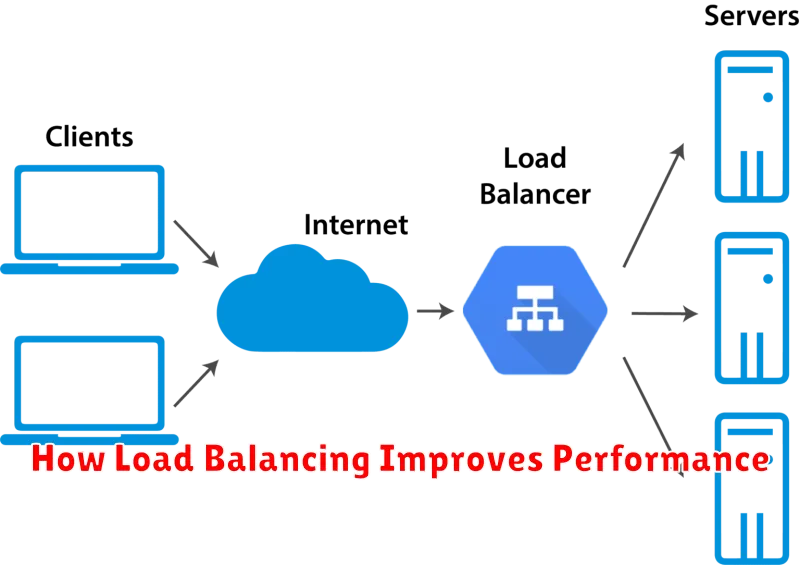

How Load Balancing Improves Performance

Load balancing significantly enhances performance by distributing incoming traffic across multiple servers. This prevents any single server from becoming overloaded, which can lead to slow response times and even crashes. By spreading the workload, load balancing ensures that each server operates within its optimal capacity.

This efficient distribution of traffic results in faster response times for end-users. Reduced latency leads to a better user experience, especially during peak traffic periods. Improved resource utilization is another key benefit. Instead of relying on a single powerful (and expensive) server, an application behind a load balancer can scale across multiple less powerful machines. This can result in cost savings while maintaining, or even improving, performance.

Furthermore, load balancing increases availability. If one server fails, the load balancer automatically redirects traffic to the remaining healthy servers. This redundancy minimizes downtime and keeps the service available to users, a critical factor for businesses reliant on online operations. The resulting fault tolerance makes for a more robust and reliable infrastructure.

Popular Cloud Load Balancing Services

Several cloud providers offer robust load balancing solutions. Choosing the right one depends on specific needs and existing infrastructure. Here are a few popular choices:

Amazon Web Services (AWS) offers Elastic Load Balancing (ELB), a family of load balancers that includes the Application Load Balancer (Layer 7), the Network Load Balancer (Layer 4), and the Gateway Load Balancer (for routing traffic through virtual appliances such as firewalls). This allows for flexibility in distributing traffic across different types of resources.

Google Cloud Platform (GCP) provides its Cloud Load Balancing service. This offering supports global HTTP(S) load balancing, as well as internal and regional load balancing for TCP/UDP traffic. It’s well-integrated with other GCP services.

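Microsoft Azure offers Azure Load Balancer, which operates at Layer 4 (transport) and distributes TCP and UDP traffic. Layer 7 (application) load balancing for HTTP(S) traffic is handled by a separate service, Azure Application Gateway. Azure Load Balancer supports configurable health probes and hash-based traffic distribution.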

Configuring Load Balancers for High Availability

Configuring load balancers for high availability involves strategically distributing incoming traffic across multiple servers. This redundancy minimizes the impact of server failures, ensuring continuous service availability. A key aspect of this configuration is choosing the right algorithm for distributing traffic. Common algorithms include round robin, least connections, and IP hash. Round robin distributes traffic sequentially across the servers, least connections directs traffic to the server with the fewest active connections, and IP hash maps each client IP address to a server so that the same client consistently reaches the same backend.
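The three algorithms named above can be sketched in a few lines of Python. This is an illustrative sketch, not how any particular cloud load balancer implements them; the server names and function names are hypothetical, and the use of SHA-256 for IP hashing is an assumption made for simplicity.

```python
import hashlib
import itertools

SERVERS = ["app-1", "app-2", "app-3"]  # hypothetical backend names


def round_robin(servers):
    """Return an iterator that yields servers in a repeating sequence."""
    return itertools.cycle(servers)


def least_connections(active):
    """Return the server with the fewest active connections.

    `active` maps server name -> current connection count.
    Ties go to the server that appears first in the mapping.
    """
    return min(active, key=active.get)


def ip_hash(client_ip, servers):
    """Map a client IP to a server so repeat requests land on the same one."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]
```

Note that with this simple modulo scheme, adding or removing a server changes the mapping for most clients. Production systems often use consistent hashing to limit that reshuffling.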

Health checks are essential for monitoring the status of backend servers. The load balancer periodically probes these servers to determine their availability. If a server fails a health check, it’s automatically removed from the pool, preventing traffic from being directed to a non-responsive server. The frequency and type of health check should be tailored to the specific application and environment.
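The health-check behavior described above could look roughly like the following Python sketch. The `BackendPool` class, the `unhealthy_threshold` parameter, and the injected `probe` callable are hypothetical names for illustration. A real load balancer would typically probe over HTTP or TCP on a timer and often requires several consecutive successes before restoring a backend, which this simplified version does not model.

```python
class BackendPool:
    """Tracks which backends are eligible to receive traffic."""

    def __init__(self, backends, unhealthy_threshold=3):
        # Consecutive failed probes per backend.
        self.failures = {name: 0 for name in backends}
        self.unhealthy_threshold = unhealthy_threshold

    def record_probe(self, name, ok):
        """Update a backend's consecutive-failure count after one probe."""
        if ok:
            self.failures[name] = 0  # one success restores the backend
        else:
            self.failures[name] += 1

    def healthy(self):
        """Return backends that have not reached the failure threshold."""
        return [
            name
            for name, count in self.failures.items()
            if count < self.unhealthy_threshold
        ]


def run_health_checks(pool, probe):
    """Probe every backend once; `probe(name)` returns True if it responded."""
    for name in list(pool.failures):
        pool.record_probe(name, probe(name))
```

Requiring several consecutive failures before removing a backend avoids ejecting a server because of a single dropped probe.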

Furthermore, employing multiple load balancers enhances redundancy. This architecture involves a primary load balancer distributing traffic, with a secondary load balancer on standby. If the primary load balancer fails, the secondary one automatically takes over, ensuring uninterrupted service. This configuration is crucial for mission-critical applications requiring maximum uptime.
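The primary/standby arrangement boils down to a priority-ordered selection, sketched below in Python. The balancer names and the `is_alive` callable are hypothetical; in practice the takeover is usually performed by the cloud provider or by a mechanism such as a floating IP or DNS update rather than by application code.

```python
def active_balancer(balancers, is_alive):
    """Return the first balancer, in priority order, that is alive.

    `balancers` lists names from highest to lowest priority, and
    `is_alive(name)` reports whether that balancer is responding.
    """
    for name in balancers:
        if is_alive(name):
            return name
    raise RuntimeError("no load balancer available")
```

For example, with `["lb-primary", "lb-standby"]`, traffic goes to `lb-primary` while it is alive and to `lb-standby` only when the primary stops responding.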

Monitoring Traffic and Failover

Monitoring the health and performance of your load-balanced applications is crucial. Real-time insights into traffic flow, server utilization, and response times allow for proactive management and optimization. This visibility helps identify bottlenecks, anticipate scaling needs, and ensure consistent application performance.

Failover capabilities are integral to load balancing. Should a server become unavailable due to maintenance or an unforeseen outage, the load balancer automatically redirects traffic to the remaining healthy servers. This seamless transition minimizes disruption to users and maintains business continuity.

Sophisticated load balancing solutions provide automated health checks that constantly probe server responsiveness. If a server fails to respond, it is removed from the pool, preventing traffic from being directed to a malfunctioning instance. This dynamic responsiveness enhances reliability and provides a resilient infrastructure.

Common Mistakes to Avoid

Implementing cloud load balancing effectively requires careful planning and execution. Overlooking key aspects can lead to suboptimal performance and negate the benefits of load balancing. One common mistake is improper configuration of the load balancer itself. This includes choosing the wrong algorithm for distributing traffic or failing to adequately provision resources for the load balancer to handle peak loads.

Another frequent oversight is neglecting health checks. Without regular health checks, the load balancer may continue directing traffic to unhealthy instances, resulting in errors and downtime. Insufficient monitoring is also problematic. Failing to track key metrics, such as server response times and error rates, can make it difficult to identify performance bottlenecks and optimize the load balancing strategy.
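As a rough illustration of the two metrics named above, the following Python sketch computes an error rate and a latency percentile from collected samples. The function names are hypothetical, counting only 5xx status codes as errors is an assumption, and the percentile uses the simple nearest-rank method. Managed load balancers usually expose equivalent metrics through the provider's monitoring service.

```python
import math


def error_rate(status_codes):
    """Fraction of responses that were server errors (HTTP 5xx)."""
    if not status_codes:
        return 0.0
    errors = sum(1 for code in status_codes if code >= 500)
    return errors / len(status_codes)


def percentile(values, pct):
    """Nearest-rank percentile of `values` for 0 < pct <= 100."""
    if not values:
        raise ValueError("percentile of an empty sample is undefined")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]
```

Tracking a high percentile such as the 95th, rather than the average, helps surface the slow requests that a mean would hide.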

Finally, lack of scalability can hinder the effectiveness of load balancing. As traffic grows, the infrastructure must be able to scale accordingly. This includes both the load balancer itself and the underlying server instances. Failure to plan for scalability can lead to overload and service disruptions.